Streamlined and efficient artificial-intelligence-assisted underwater video analysis

The Scottish Government seeks to develop demonstrably sustainable aquaculture which places enhanced emphasis on environmental protection […and] to reform and streamline regulatory processes so that development is more responsive, transparent and efficient. Our ‘SEA-AI’ research will enable the collection of high-quality seabed imagery and its rapid, cost-effective analysis, facilitating consistent and transparent decision-making to the benefit of the entire Scottish aquaculture sector and beyond. SEA-AI has the direct involvement of both industry and regulators.

Recent innovations in artificial intelligence (particularly convolutional neural nets, CNNs) have revolutionised image analysis.

Our challenge is to develop protocols and algorithms enabling cost-effective, accurate, rapid, and consistent seabed-image collection and analysis that will facilitate timely, transparent decision-making.

We will meet our challenge by developing standardised procedures for:

1. Image (data) capture ensuring that the imagery is of sufficient quality for CNN-assisted assessment

2. Video quality checking and the generation of corrected geo-referenced orthomosaics

3. The use of CNNs to assist human experts in the identification of Priority Marine Features (PMFs), both biotopes and key taxa. For example, here we are using a machine to identify the Northern Sea Fan in some challenging drop-camera footage: https://www.youtube.com/watch?v=aHDmTvjd3aw

Working with our partners, SEPA, Nature Scot and Scottish Sea Farms, we will embed these new approaches into the sector through guidance documentation published by SEPA and Nature Scot, and will make the trained CNN available to any user. By demonstrating the potential of CNNs, we will deliver a transformation in the demonstrably sustainable development of the aquaculture sector, both in Scotland and globally.

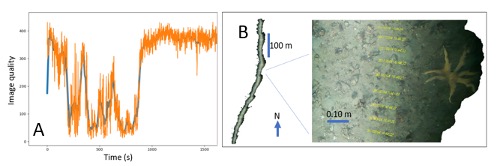

During SEA-AI we will deliver image quality assessment algorithms (A) and generate geo-referenced orthomosaics (B, showing the survey track and a detail from the track).
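As a rough illustration of what an automated image quality check can look like, the sketch below scores the sharpness of a greyscale frame using the variance of a 4-neighbour Laplacian, a common blur measure. It is not the SEA-AI algorithm: the function names, the list-of-rows frame format and the `threshold` value are all assumptions made for this example.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over a 2-D greyscale frame.

    `gray` is a list of equal-length rows of pixel intensities (an assumed
    format for this sketch). Sharp frames give high values; blurred or
    featureless frames give values near zero.
    """
    rows, cols = len(gray), len(gray[0])
    if rows < 3 or cols < 3:
        raise ValueError("frame must be at least 3x3 pixels")
    responses = []
    # Skip the border so every pixel has four neighbours.
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            responses.append(
                gray[r - 1][c] + gray[r + 1][c]
                + gray[r][c - 1] + gray[r][c + 1]
                - 4 * gray[r][c]
            )
    return pvariance(responses)


def is_usable(gray, threshold=100.0):
    """Flag a frame as sharp enough for analysis.

    `threshold` is a hypothetical cut-off; in practice it would need
    calibrating against footage that experts have judged acceptable.
    """
    return laplacian_variance(gray) >= threshold


# A uniform frame has no detail; a checkerboard has maximal local contrast.
flat = [[128] * 4 for _ in range(4)]
checker = [[255 * ((r + c) % 2) for c in range(4)] for r in range(4)]
print(is_usable(flat), is_usable(checker))
```

A single sharpness score would only be one component of a quality check; factors such as lighting, turbidity and camera altitude would need their own measures.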

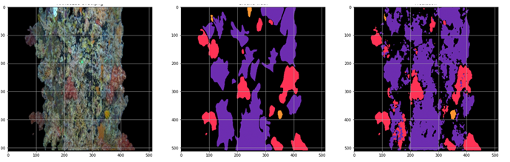

At SAMS we are continuing to develop AI machines to distinguish taxa: starting from raw images (left), we train each machine on 20–100 annotated images (centre). The trained machine is then assessed on the classifications (shown as three separate colours in this model) that it makes on images it has not previously ‘seen’ (right).
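One way to put a number on how well a trained machine performs on unseen images is to compare its predicted label mask with an expert's annotation, class by class. The sketch below uses intersection-over-union (IoU) for this. The choice of IoU, the function name and the integer class labels are assumptions for illustration; the source does not state which metric is used at SAMS.

```python
def per_class_iou(truth, predicted, classes):
    """Intersection-over-union per class for two equal-sized label masks.

    `truth` and `predicted` are lists of rows of class labels (an assumed
    format). Returns a dict mapping each class to its IoU, or None when the
    class appears in neither mask.
    """
    scores = {}
    for cls in classes:
        intersection = 0
        union = 0
        for t_row, p_row in zip(truth, predicted):
            for t, p in zip(t_row, p_row):
                in_truth, in_pred = t == cls, p == cls
                intersection += in_truth and in_pred
                union += in_truth or in_pred
        scores[cls] = intersection / union if union else None
    return scores


# Toy 2x3 masks with three classes, standing in for the three colours.
expert = [[0, 0, 1],
          [1, 2, 2]]
machine = [[0, 1, 1],
           [1, 2, 0]]
print(per_class_iou(expert, machine, classes=[0, 1, 2]))
```

Reporting the score per class, rather than a single overall accuracy, helps show whether a rare taxon is being missed even when the common background classes are labelled well.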

Role of SAMS on the project

Developing deep-learning neural nets (artificial intelligence) to assist in underwater video characterisation